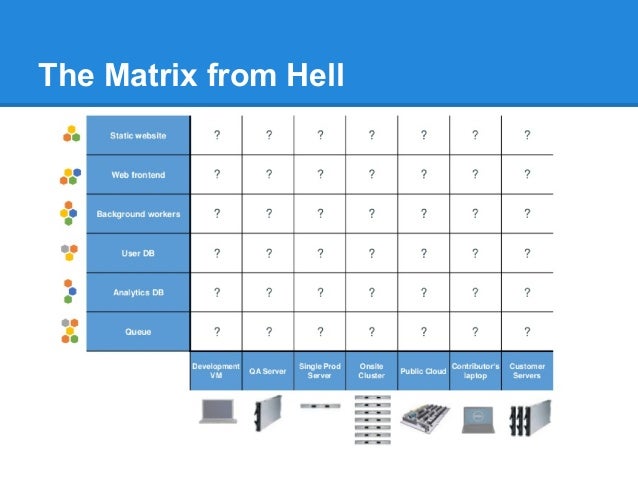

Are you dealing with a “Matrix from Hell” situation that eats up your time with upgrading and downgrading your systems?

“Matrix from Hell” is the common term for the difficulty of setting up the development stack required to build an end-to-end application.

Problems

The web servers, databases, and other third-party tools that support your long-term projects all have to work together on the underlying operating systems. Even when the compatibility issues are resolved for a particular version, you face the same problems again with upcoming versions.

A new developer usually takes 2-3 months to set up their development system, with extra time spent upgrading and downgrading frameworks to make them compatible with each other.

Checking dependencies between libraries and testing compatibility with different browsers and operating systems are familiar tasks before any planned release, followed by patches for any bugs reported afterward.

As your project grows, complexity increases, and the entire system takes months to complete a release.

No matter which project management method you use, if the technology does not support your project management model, your development time is consumed by non-development tasks.

As a solution to these problems, teams packaged an image of a basic environment setup as a virtual machine. VMs reduced the setup time for developers, but the question remained: how well would the project perform in real environment conditions?

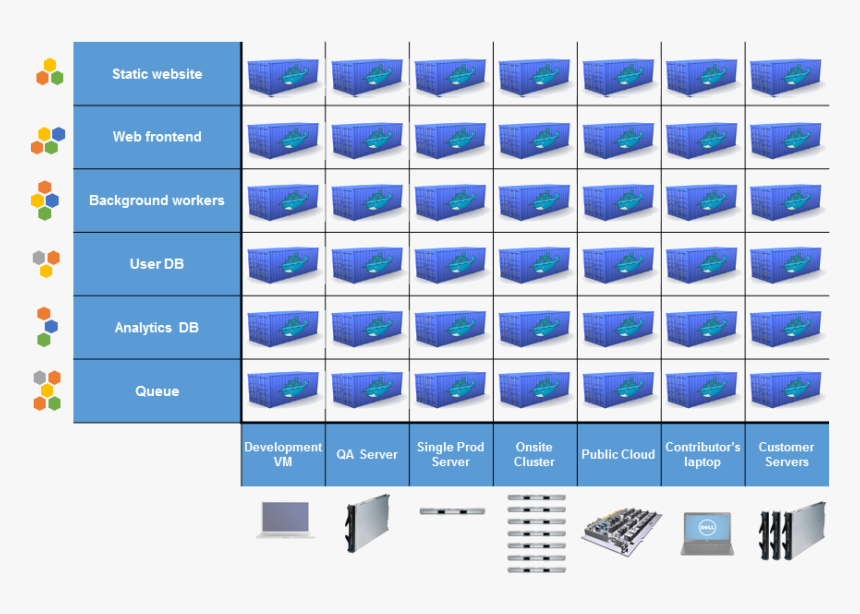

Then came the era of containers and Docker, which work in any environment and scale faster than VMs.

Container technology such as Docker works across the end-to-end stack: the web server, database, and messaging components each run in containers and are well orchestrated.

Compatibility with OS:

Certain OS versions are not compatible with some services or with their dependent libraries. Applications and their versions change over time, and every change means checking compatibility between versions again.

Since cloud-based technologies provide managed services for your application, these issues are less of a concern, but there are still points worth noting for new developers:
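To make the compatibility problem concrete, here is a minimal sketch of a compatibility matrix check. The component names, OS version labels, and helper functions are all hypothetical and only illustrate the idea of finding an OS version that every part of the stack supports.

```python
# Hypothetical support data: which OS versions each component is known to run on.
# Component names and version labels are invented for illustration only.
SUPPORTED_OS = {
    "web-server": {"os-18", "os-20", "os-22"},
    "database": {"os-20", "os-22"},
    "message-queue": {"os-18", "os-20"},
}


def common_os_versions(support):
    """Return the OS versions that every component in `support` can run on."""
    versions = None
    for os_versions in support.values():
        versions = set(os_versions) if versions is None else versions & os_versions
    return versions or set()


def blocking_components(support, os_version):
    """List the components that do not support `os_version`, sorted by name."""
    return sorted(name for name, oses in support.items() if os_version not in oses)


if __name__ == "__main__":
    # OS versions that are safe for the whole stack.
    print(common_os_versions(SUPPORTED_OS))
    # Components that would block an upgrade to "os-22".
    print(blocking_components(SUPPORTED_OS, "os-22"))
```

Every new component or version adds another row or column to this matrix, which is why checking it by hand becomes painful as the stack grows.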

1) Setting up a new project environment, which takes time

2) Different environments for Dev, QA, and Production

3) Shipping the application from one environment to another

These compatibility issues are always there, and we need a consistent infrastructure to tackle them.

Docker runs each component in a separate container with its own libraries and dependencies, without affecting the other components. With just Docker installed on top of the OS, an isolated container environment is created for each service.

Containers avoid the heavy, slow guest OS layer that every virtual machine carries. Containers run on a container runtime; Docker, rkt (formerly Rocket), and containerd are popular container runtimes.

The layers created by containers are self-contained, and applications and their dependencies are independent of each other.

Working with containers solves multiple problems:

- They are portable, so they run in all environments.

- They improve efficiency because there is no separate guest OS layer, so new containers are fast to create.

- They have a low footprint, scale easily, and are simple to spin up.

- They are lightweight. A container does not need a dedicated, pre-allocated set of resources, so it uses fewer computing resources.

Containers are temporary; they can be destroyed and created as required. These properties are handy in canary deployments, blue-green deployments, and A/B testing.

Containers solved the problems faced in microservice architectures, but scaling, creating, and destroying containers was still done manually until Kubernetes was created to orchestrate them.
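As an illustration of why disposable containers suit canary deployments, the sketch below sends a small, fixed share of requests to a new version. In practice this is usually done by a load balancer or service mesh; the function name `pick_version` and its parameters are hypothetical.

```python
import hashlib


def pick_version(request_id, canary_percent):
    """Route a request to 'canary' or 'stable' using a stable hash bucket (0-99)."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    # Hashing the ID keeps the decision deterministic: the same request ID
    # always lands in the same bucket, so a user sees a consistent version.
    digest = hashlib.sha256(request_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return "canary" if bucket < canary_percent else "stable"


if __name__ == "__main__":
    for user in ("user-1", "user-2", "user-3"):
        print(user, pick_version(user, 10))
```

If the canary misbehaves, setting `canary_percent` to 0 sends all traffic back to the stable containers, and the canary containers can simply be destroyed.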

Kubernetes:

Kubernetes manages the servers that run containers. Manual orchestration of these containers is difficult, and not knowing when to scale up or scale down creates a lot of chaos. Kubernetes automatically runs the servers as needed and scales the containers up or down according to demand.

When you work with Kubernetes, developers do not need to know the underlying architecture, as the containers run in any given environment. Resizing a cluster by adding or removing nodes is easy.

Kubernetes runs on nodes. A master node controls all the nodes that are orchestrated.
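The scaling decision can be sketched with the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The function below is a simplified illustration, not Kubernetes code; its name, parameters, and default limits are assumptions.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Scale the replica count in proportion to load, clamped to [min, max]."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Proportional rule: more load per replica than the target means more replicas.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 replicas averaging 90% CPU against a 60% target: scale up.
    print(desired_replicas(4, 90, 60))
    # 4 replicas averaging 30% CPU against a 60% target: scale down.
    print(desired_replicas(4, 30, 60))
```

The clamp to a minimum and maximum keeps a traffic spike or a quiet period from pushing the replica count to an extreme.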

Here are some features that Kubernetes provides along with orchestration:

- Communicates with the runtime: The container runtime manages the containers. Kubernetes manages containers by communicating with the container runtime.

- Stores the state of configuration: Storing cluster metadata, configuration, and data is essential, because it is what lets Kubernetes create and destroy containers. Kubernetes uses etcd to store this data as key-value pairs.

- Identifies expected configuration: The expected state of the cluster is derived from the configuration details held in the datastore.

- Orchestrated layer for networking: Pod networking lets pods communicate across servers. Kubernetes typically uses an overlay network to allow communication between pods.

- Service discovery: Containers are short-lived, ephemeral resources. When a container is destroyed, a replacement is created with a new IP address, and these changing IPs could disrupt communication between services. Kubernetes provides a stable IP and DNS name that route requests to the current container instances.

- Health check: Kubernetes monitors the health of containers. An unhealthy container that does not work properly is destroyed, and a new container is created to replace it.

- Cloud provider integration: Kubernetes works well with cloud providers. It uses the cloud provider's APIs for load balancing and storage, which gives you a single place to run and manage all your containers and their resources.

- Configuration management: Kubernetes can store sensitive configuration such as OAuth tokens, passwords, and SSH keys. These can be updated without rebuilding container images, and the secrets stay confidential because they are not exposed in the stack configuration.

- Bin packing: You tell Kubernetes the CPU and RAM requirements of each container, and it fits the containers onto nodes to make the best use of resources.

- Storage orchestration: Kubernetes can automatically mount storage systems such as local storage or storage from public cloud providers.
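The bin packing feature above can be pictured with a classic first-fit decreasing heuristic. This is not how the Kubernetes scheduler is implemented; it is a simplified stand-in, and the function name, container names, and node sizes are hypothetical. CPU is expressed in millicores and RAM in MB.

```python
def place_containers(containers, nodes):
    """Assign containers to nodes using first-fit decreasing.

    containers: dict of name -> (cpu_millicores, ram_mb) requested
    nodes: dict of name -> (cpu_millicores, ram_mb) capacity
    Returns a dict of container name -> node name (None if it fits nowhere).
    """
    free = {name: list(capacity) for name, capacity in nodes.items()}
    placement = {}
    # Place the largest requests first so big containers are not stranded later.
    ordered = sorted(containers.items(), key=lambda item: item[1], reverse=True)
    for cname, (cpu, ram) in ordered:
        placement[cname] = None
        for nname, remaining in free.items():
            if remaining[0] >= cpu and remaining[1] >= ram:
                remaining[0] -= cpu
                remaining[1] -= ram
                placement[cname] = nname
                break
    return placement


if __name__ == "__main__":
    nodes = {"node-a": (2000, 4096), "node-b": (2000, 4096)}
    containers = {
        "web": (1000, 1024),
        "db": (1500, 3072),
        "cache": (500, 1024),
        "worker": (1000, 2048),
    }
    print(place_containers(containers, nodes))
```

In this example all four containers fit on two nodes with no spare CPU, which is the kind of dense placement that stated resource requirements make possible.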

Takeaway:

Though we define Kubernetes as an orchestration system, it offers much more functionality than that. It is built from independent, composable control processes that continuously drive the current state toward the desired state. Kubernetes makes the system robust, scalable, extensible, and powerful. By eliminating the thick OS layers of VMs, containers can be created and destroyed quickly, keeping pace with the traffic generated by microservices apps.
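The idea of moving from the current state to the desired state can be sketched as a single reconciliation step. This is a simplified model rather than actual Kubernetes controller code; the `reconcile` function, the action tuples, and the service names are assumptions made for illustration.

```python
def reconcile(desired, running):
    """Return the actions needed to move `running` toward `desired`.

    desired: dict of service -> number of replicas wanted
    running: dict of service -> list of (container_id, healthy) tuples
    """
    actions = []
    for service in sorted(set(desired) | set(running)):
        want = desired.get(service, 0)
        healthy = []
        for container_id, is_healthy in running.get(service, []):
            if is_healthy:
                healthy.append(container_id)
            else:
                # Unhealthy containers are destroyed and replaced below.
                actions.append(("destroy", service, container_id))
        # Remove healthy containers beyond the desired count.
        for container_id in healthy[want:]:
            actions.append(("destroy", service, container_id))
        # Create whatever is still missing.
        for _ in range(max(0, want - len(healthy))):
            actions.append(("create", service, None))
    return actions


if __name__ == "__main__":
    desired = {"web": 3, "db": 1}
    running = {
        "web": [("web-1", True), ("web-2", False)],
        "db": [("db-1", True), ("db-2", True)],
    }
    for action in reconcile(desired, running):
        print(action)
```

Running a step like this repeatedly is what lets the system heal itself: when the two states already match, the function returns no actions.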